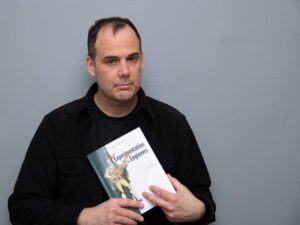

Artificial Intelligence Instructor David Sweet holds a copy of his recently published book Experimentation for Engineers.

By Dave DeFusco

You’re scrolling through your Twitter feed and you see something new. Maybe it’s a section called “For You” that curates content based on your previous activity, or it’s “Views” that tells you how many times your tweets have been viewed. If you asked Katz School AI Instructor David Sweet, who has written a new book called Experimentation for Engineers, he’d say that behind the scenes, Twitter’s systems engineers are testing new features to optimize an objective, such as improving the app’s user experience, boosting ad placement or generating more revenue.

“Maybe you'll be in the A group or you'll be in the B group—the group with the old option and the group with the new option,” said Sweet, referring to A/B testing, a common experiment used in business. “What they're doing is comparing how this change influences your behavior.”

A/B testing is a process wherein two or more versions of a variable, such as a web page or Twitter feed, are shown to different segments of users at the same time to determine which version performs best on a chosen measure. A/B testing takes the guesswork out of website and social media optimization and enables companies to make data-backed decisions.

In A/B testing, A refers to “control” or the original testing variable, and B refers to “variation” or a new version of the original testing variable. In the case of Twitter, Sweet said engineers are trying to determine whether users spend more time on the platform or less time, and whether they engage more or less with the things they see.

“What they might be measuring is the time you spend looking at the tweets that they show you or whether you scroll past them, indicating that you don't like them,” he said. “So they’ll try to optimize the time you spend looking at the tweets or minimize the number of tweets you scrolled past.”
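The comparison Sweet describes can be sketched in a few lines of Python. This is a minimal illustration, not Twitter's actual method: the viewing times are simulated, the group sizes and averages are invented for the example, and `ab_test` is a hypothetical helper that reports how far apart the two groups are relative to the noise in the measurements.

```python
import math
import random
import statistics


def ab_test(control, variation):
    """Compare group B (variation) with group A (control).

    Returns the difference in means (B minus A), its standard error,
    and the z-score (difference divided by standard error).
    """
    diff = statistics.mean(variation) - statistics.mean(control)
    se = math.sqrt(
        statistics.variance(control) / len(control)
        + statistics.variance(variation) / len(variation)
    )
    return diff, se, diff / se


# Simulated minutes spent viewing tweets per user (assumed numbers).
rng = random.Random(0)
control = [rng.gauss(10.0, 3.0) for _ in range(2000)]    # old option (A)
variation = [rng.gauss(10.5, 3.0) for _ in range(2000)]  # new option (B)

diff, se, z = ab_test(control, variation)
print(f"B minus A: {diff:.2f} minutes (standard error {se:.2f}, z = {z:.1f})")
```

A z-score well above about 2 would suggest the new option really does change viewing time rather than reflecting chance variation between the two groups.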

Sweet’s book, whose full title is Experimentation for Engineers: From A/B Testing to Bayesian Optimization, was published by Manning in January. It was written for engineers who work with software, either designing financial trading systems or internet-based systems, where continuous upgrades are necessary to stay competitive.

If the changes Twitter engineers introduce, such as the Views counter, influence user behavior positively, the engineers keep them, which completes the cycle. If the changes influence user behavior negatively, for instance if you close the app because you don’t like what you see, the engineers remove the feature and the process starts over with the next proposed change.

“The A/B testing becomes kind of like a social science experiment because they're interacting with people,” said Sweet. “Most of the changes being made behind the scenes are to the algorithms that determine which tweets you see.”

The book also delves into the cost of running experiments for companies such as Twitter. Experiments take time and effort to run, and they carry risk. By deploying an innovation that ultimately doesn't work, companies risk losing money and users.

“The question is how many tweets am I going to show you before I finally display an ad,” he said. “If I show you an ad right away, I might turn you off because you’ll think Twitter is all ads and you’ll lose interest in it. But maybe you'd be willing to put up with an ad after looking at five tweets because you had a good experience. To get to that place, it takes more testing, but that costs money. It’s a juggling act.”

Sweet said he incorporated into his book a range of experimental methods that he’s learned over the years, including A/B testing; response surface modeling, which is a collection of mathematical and statistical techniques whose purpose is to analyze a set of problems using an empirical model; multi-armed bandits, a problem in which limited resources must be allocated between competing, or alternative, choices in a way that maximizes their expected gain; and Bayesian optimization, which brings all of these ideas together into a single experimentation approach.
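To make the multi-armed bandit idea concrete, here is a minimal sketch of one common strategy, epsilon-greedy, which mostly shows the option that has performed best so far and occasionally tries the others. It is an illustration under assumed numbers, not code from Sweet's book: the function name, the click rates and the 10 percent exploration rate are all hypothetical.

```python
import random


def epsilon_greedy(true_rates, n_rounds=10000, epsilon=0.1, seed=0):
    """Simulate an epsilon-greedy bandit over options with hidden success rates.

    Returns how often each option was shown and its estimated success rate.
    """
    rng = random.Random(seed)
    n_arms = len(true_rates)
    pulls = [0] * n_arms    # times each option was shown
    means = [0.0] * n_arms  # running estimate of each option's success rate

    for _ in range(n_rounds):
        if rng.random() < epsilon:
            # Explore: show a randomly chosen option.
            arm = rng.randrange(n_arms)
        else:
            # Exploit: show the option with the best estimate so far.
            arm = max(range(n_arms), key=lambda i: means[i])

        # Simulated user response: 1 for a click, 0 otherwise.
        reward = 1.0 if rng.random() < true_rates[arm] else 0.0

        # Update the running average for the option that was shown.
        pulls[arm] += 1
        means[arm] += (reward - means[arm]) / pulls[arm]

    return pulls, means


# Three hypothetical versions of a feature with assumed click rates.
pulls, means = epsilon_greedy([0.05, 0.08, 0.12])
print("times shown:", pulls)
print("estimated rates:", [round(m, 3) for m in means])
```

Unlike a fixed A/B split, this approach shifts traffic toward the better-performing option while the experiment is still running, which is one way to limit the cost of showing users a weaker version.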

“I thought it'd be easier to write a book for me if I were a student 10 or 20 years ago and I wanted to understand all of this as efficiently as possible,” he said. “It would be better to learn them all together as one topic and see how they all build on each other.”

He said Katz School students who take his elective course, Experimental Optimization, will be expected to know about experimentation methods if they go to work for major companies like Twitter, Spotify, Meta, Google or LinkedIn. “What the book is giving them is a concise way to learn all this,” said Sweet. “These companies use these methods on a regular basis.”